39 confident learning estimating uncertainty in dataset labels

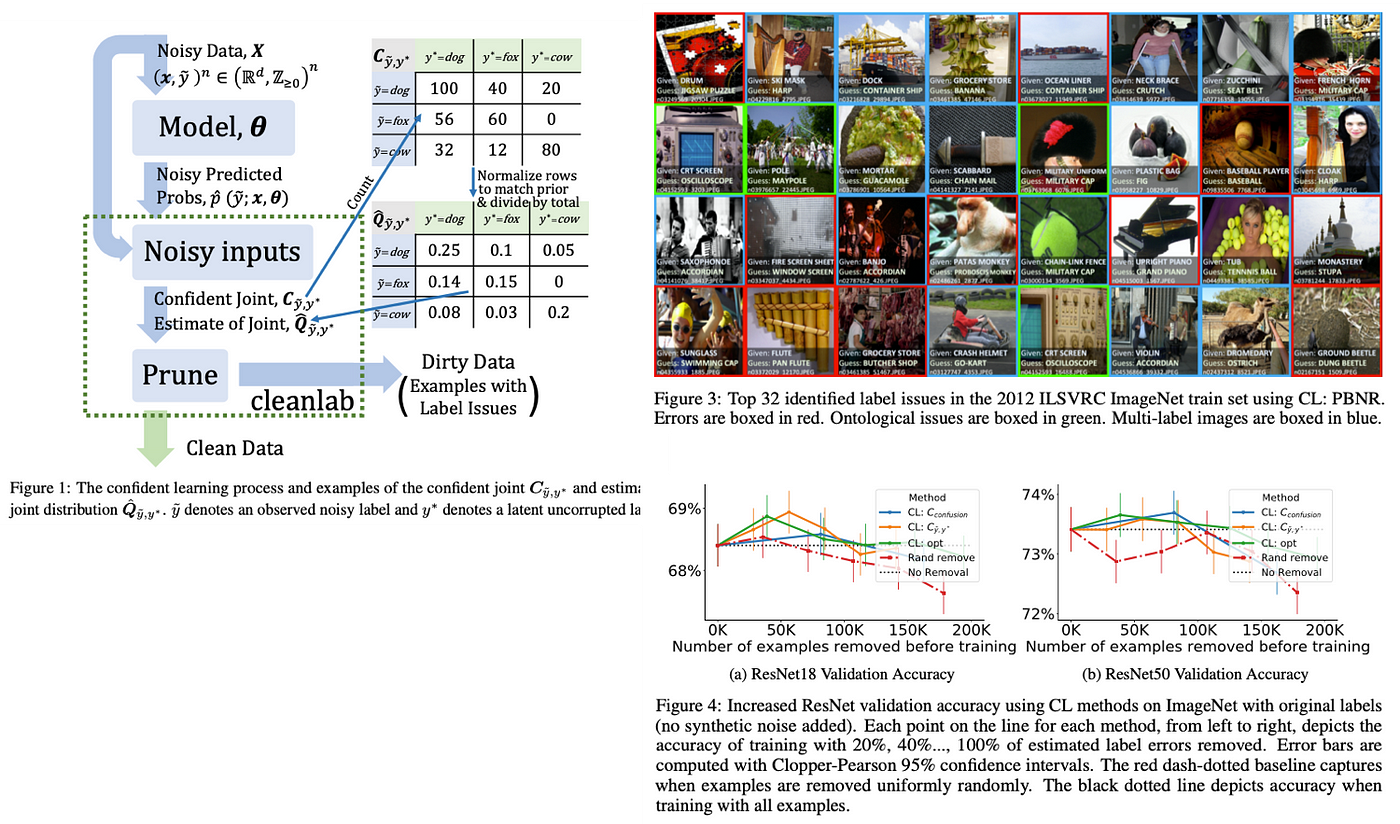

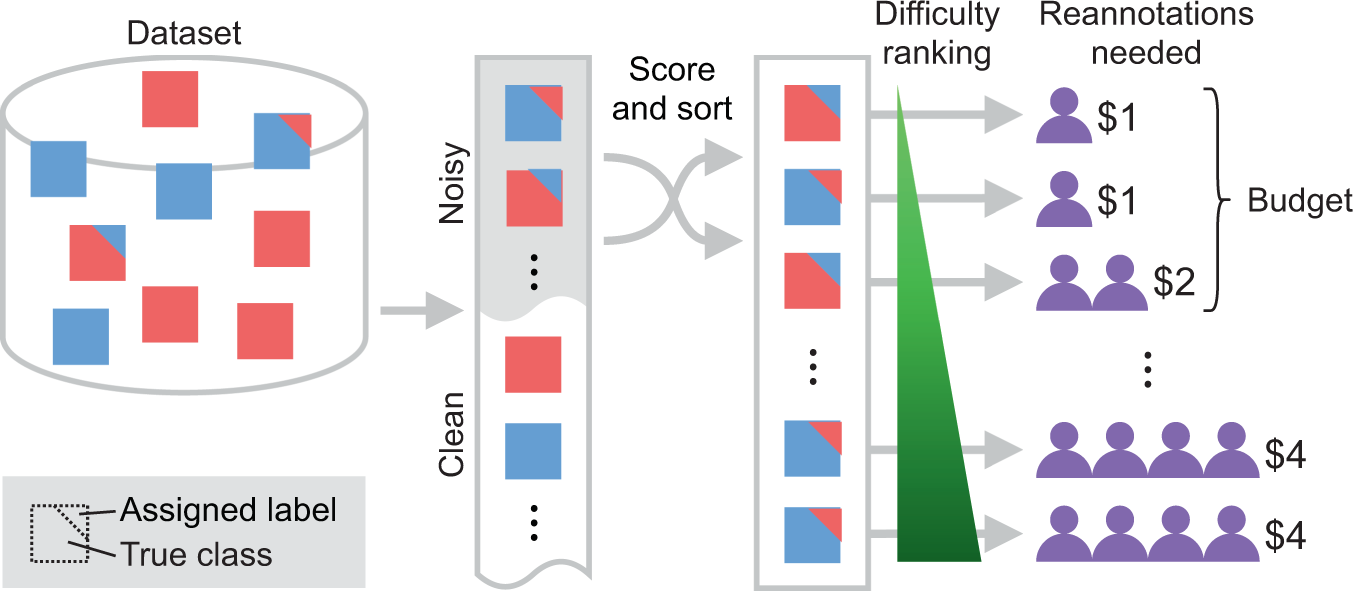

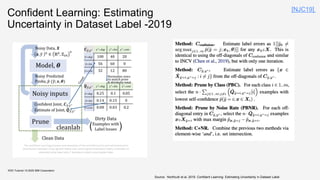

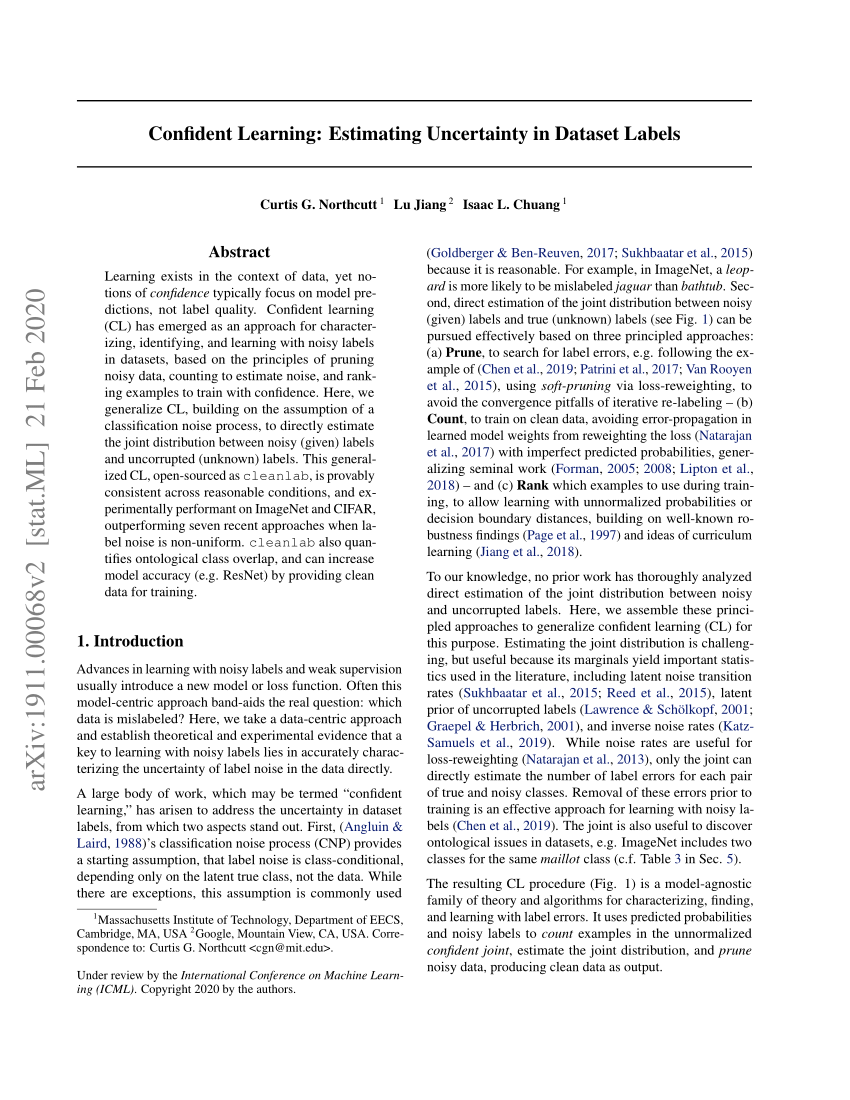

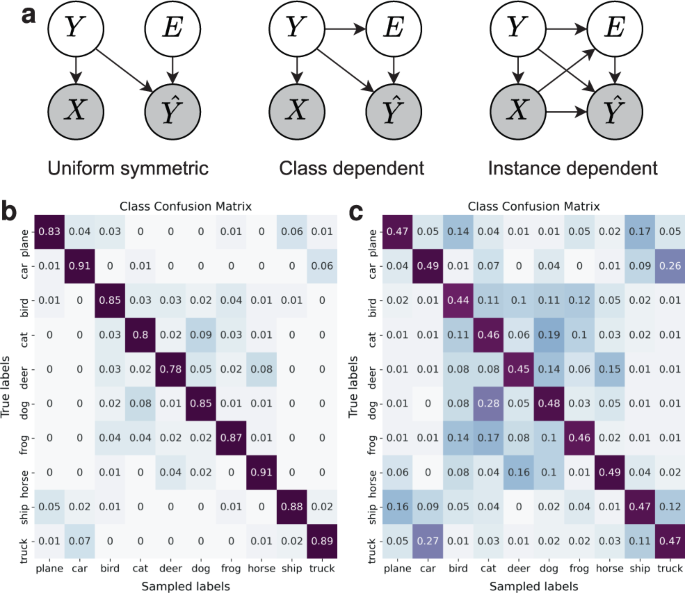

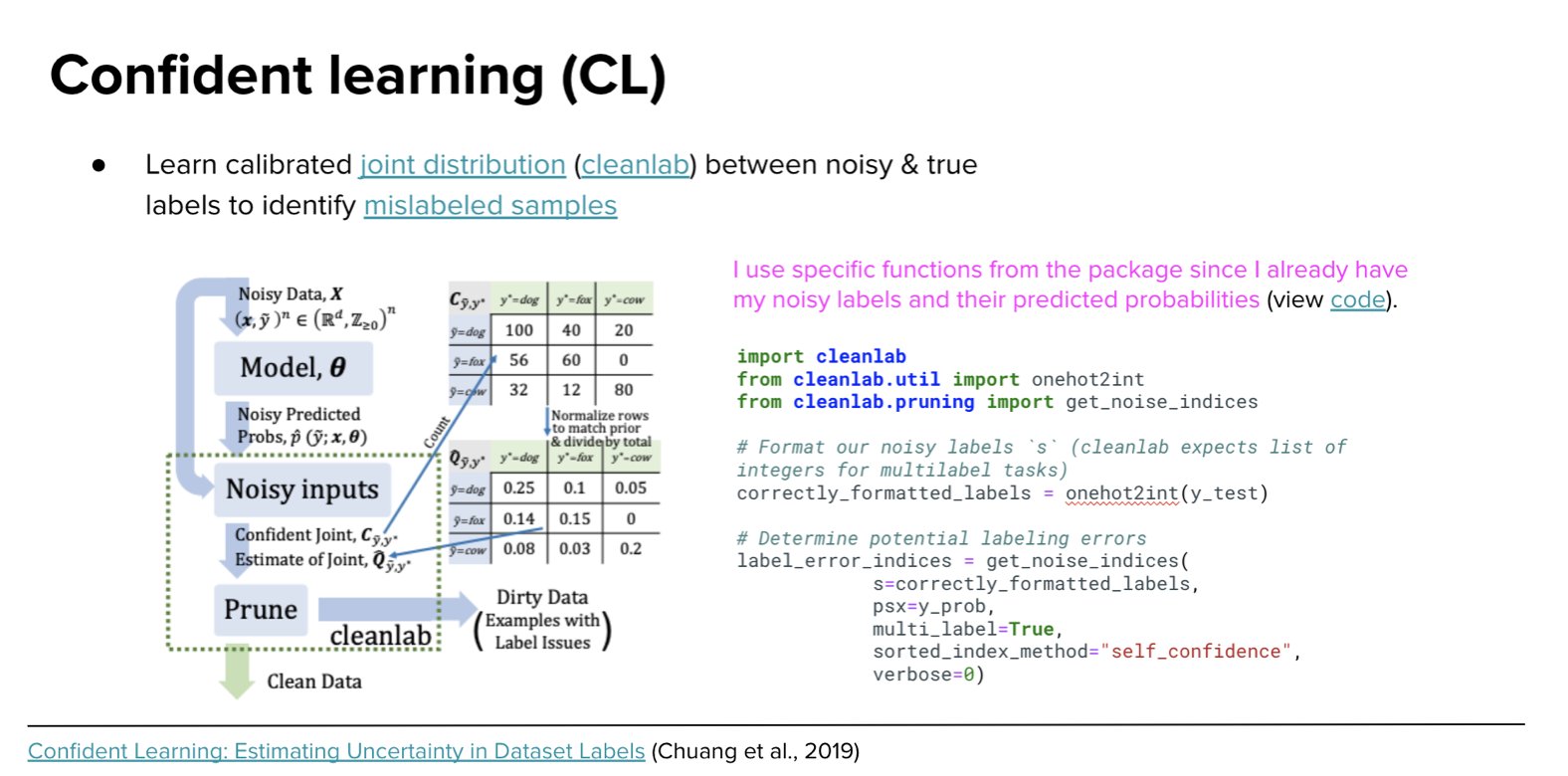

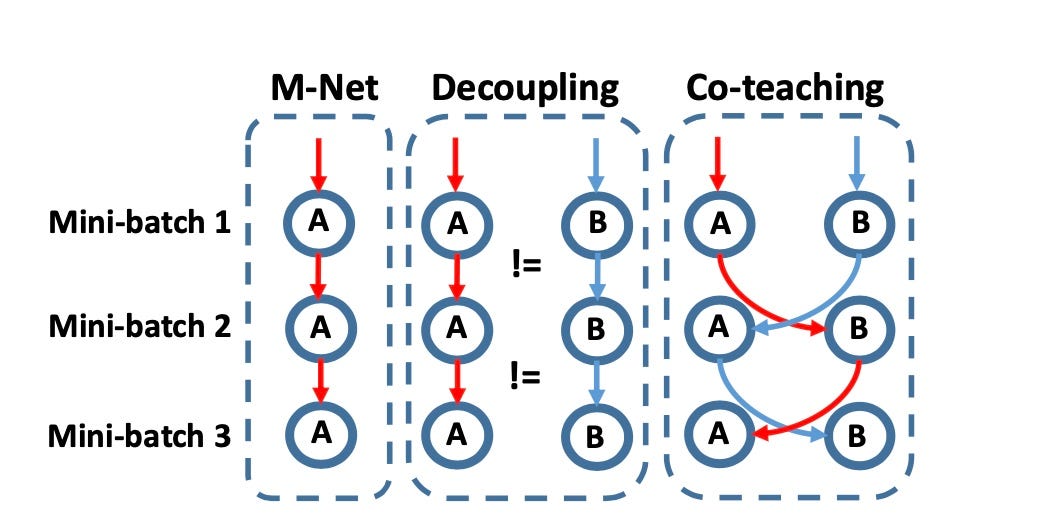

Data Noise and Label Noise in Machine Learning. Estimates of aleatoric, epistemic, and label uncertainty can detect certain types of data and label noise [11, 12]. Reflecting the certainty of a prediction is an important asset for autonomous systems, particularly in noisy real-world scenarios. Confidence is also frequently used, though it requires well-calibrated models.

Confident Learning: Estimating Uncertainty in Dataset Labels. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in datasets, based on the principles of pruning noisy data, counting with probabilistic thresholds to estimate noise, and ranking examples to train with confidence.

The NLP Index. The dataset also provides image segmentation masks, which label persuasion strategies in the corresponding ad images on the test split. We publicly release our code and dataset. Yaman Kumar Singla, Rajat Jha, Arunim Gupta, Milan Aggarwal, Aditya Garg, Ayush Bhardwaj, Tushar, Balaji Krishnamurthy, Rajiv Ratn Shah, Changyou Chen

Confident learning estimating uncertainty in dataset labels

4. Training Data - Designing Machine Learning Systems [Book]. Chapter 4. Training Data. In Chapter 3, we covered how to handle data from the systems perspective. In this chapter, we’ll go over how to handle data from the data science perspective. Despite the importance of training data in developing and improving ML models, ML curricula are heavily skewed toward modeling, which is considered by …

Confident Learning: Estimating Uncertainty in Dataset Labels, by C Northcutt · 2021 · Cited by 222. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in datasets, based on ...

Tag Page | L7. This post overviews the paper Confident Learning: Estimating Uncertainty in Dataset Labels authored by Curtis G. Northcutt, Lu Jiang, and Isaac L. Chuang. Tags: machine-learning, confident-learning, noisy-labels, deep-learning

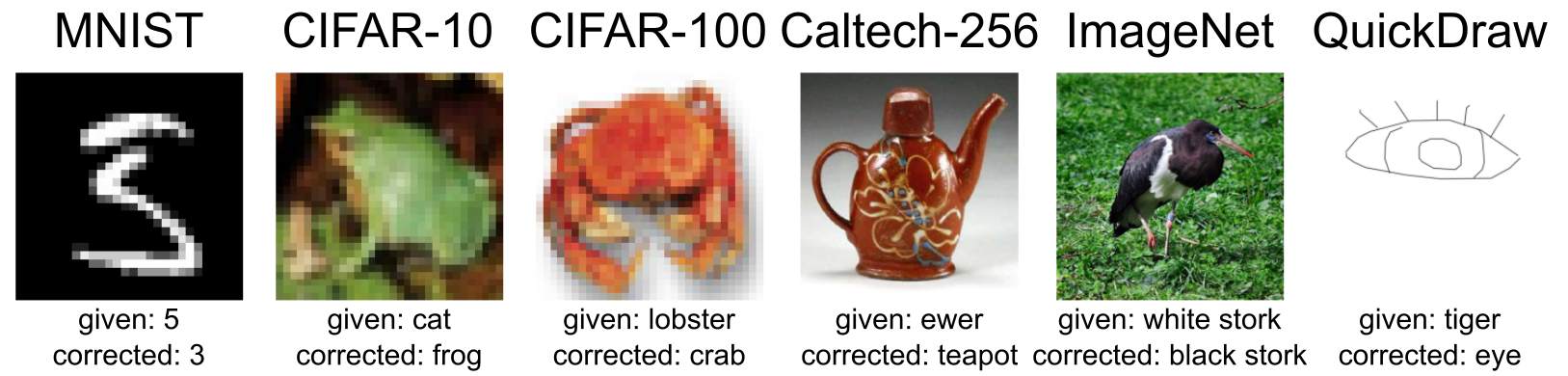

Confident Learning: Is That Label Correct? - 学習する天然ニューラルネット. What is this? The paper Confident Learning: Estimating Uncertainty in Dataset Labels, submitted to ICML 2020, was very interesting, so I am publishing a summary of it. Paper: [1911.00068] Confident Learning: Estimating Uncertainty in Dataset Labels. Very short overview: some datasets contain examples whose labels are wrong (noisy labels). Detecting such samples ...

Confident Learning: Estimating Uncertainty in Dataset Labels. Confident learning (CL) has emerged as an approach for characterizing, identifying, and learning with noisy labels in datasets, based on the principles of pruning noisy data, counting to estimate noise, and ranking examples to train with confidence.

BibMe: Free Bibliography & Citation Maker - MLA, APA, Chicago, … Take the uncertainty out of citing in APA format with our guide. Review the fundamentals of APA format and learn to cite several different source types using our detailed citation examples. Practical guide to Chicago style. Using Chicago Style is easier once you know the fundamentals.

Confident Learning - Speaker Deck. An explanatory deck on Confident Learning, which can be used to improve data quality. I hope to add examples from real-world use later. This deck was prepared for an internal study session on MLOps held at Money Forward. Reference: Pervasive Label Errors in Test Sets Destabilize Machine Learning Benchmarks ...

Learning with noisy labels | Papers With Code. Confident Learning: Estimating Uncertainty in Dataset Labels. cleanlab/cleanlab • 31 Oct 2019. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in datasets, based on the principles of pruning noisy data, counting with probabilistic thresholds to ...

GitHub - cleanlab/cleanlab: The standard data-centric AI package … cleanlab cleans your data's labels via state-of-the-art confident learning algorithms, published in this paper and ... , title={Confident Learning: Estimating Uncertainty in Dataset Labels}, author={Curtis G. Northcutt and Lu Jiang and Isaac L. Chuang}, journal={Journal of Artificial Intelligence Research (JAIR)}, volume={70}, pages ...

(PDF) Confident Learning: Estimating Uncertainty in Dataset Labels. Confident learning (CL) has emerged as an approach for characterizing, identifying, and learning with noisy labels in datasets, based on the principles of pruning noisy data, counting to estimate...

[R] Announcing Confident Learning: Finding and Learning with Label ... Confident learning (CL) has emerged as an approach for characterizing, identifying, and learning with noisy labels in datasets, based on the principles of pruning noisy data, counting to estimate noise, and ranking examples to train with confidence.

Confident Learning: Estimating Uncertainty in Dataset Labels. (arXiv ... Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in datasets, based on the principles of pruning noisy data, counting with probabilistic thresholds to estimate noise, and ranking examples to train with confidence. Whereas numerous studies have ...

A guide to machine learning for biologists - Nature. Sep 13, 2021 · In supervised machine learning, the relative proportions of each ground truth label in the dataset should also be considered, with more data required for machine learning to work if some labels ...

transferlearning/awesome_paper.md at master · jindongwang. IEEE-TMM'22 Uncertainty Modeling for Robust Domain Adaptation Under Noisy Environments. Uncertainty modeling for domain adaptation under noisy environments; MM-22 Making the Best of Both Worlds: A Domain-Oriented Transformer for Unsupervised Domain Adaptation. Using a transformer for domain adaptation (DA)

Hands on Machine Learning with Scikit Learn Keras and … One must be aware of this as part of the research and development process. 16.1.1 Which Parameters to Optimise? A statistical-based algorithmic trading model will often have many parameters and different measures of performance. An underlying statistical learning algorithm will have its own set of parameters.

Find label issues with confident learning for NLP. Estimate noisy labels: We use the Python package cleanlab, which leverages confident learning to find label errors in datasets and to learn with noisy labels. It's called cleanlab because it CLEANs LABels. cleanlab is: fast - Single-shot, non-iterative, parallelized algorithms
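The "ranking examples" idea can be illustrated without any library. The sketch below is not cleanlab's API; `rank_by_self_confidence` is a hypothetical helper name, and it assumes you already have out-of-sample predicted probabilities for every example. It orders examples by the probability the model assigns to their given label, so the most suspicious labels come first.

```python
def rank_by_self_confidence(labels, pred_probs, candidate_indices=None):
    """Return example indices sorted from least to most self-confident.

    labels[i] is the given (possibly noisy) label of example i.
    pred_probs[i][k] is the model's predicted probability of class k.
    Self-confidence is the predicted probability of the given label.
    """
    if candidate_indices is None:
        candidate_indices = range(len(labels))
    # Low probability for the given label means the label looks suspicious.
    return sorted(candidate_indices, key=lambda i: pred_probs[i][labels[i]])


labels = [0, 1, 0, 1]
pred_probs = [[0.9, 0.1], [0.6, 0.4], [0.3, 0.7], [0.2, 0.8]]
ranking = rank_by_self_confidence(labels, pred_probs)
print(ranking)  # most suspicious example first
```

Reviewing the top of this ranking first is a cheap way to spend a limited relabeling budget.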

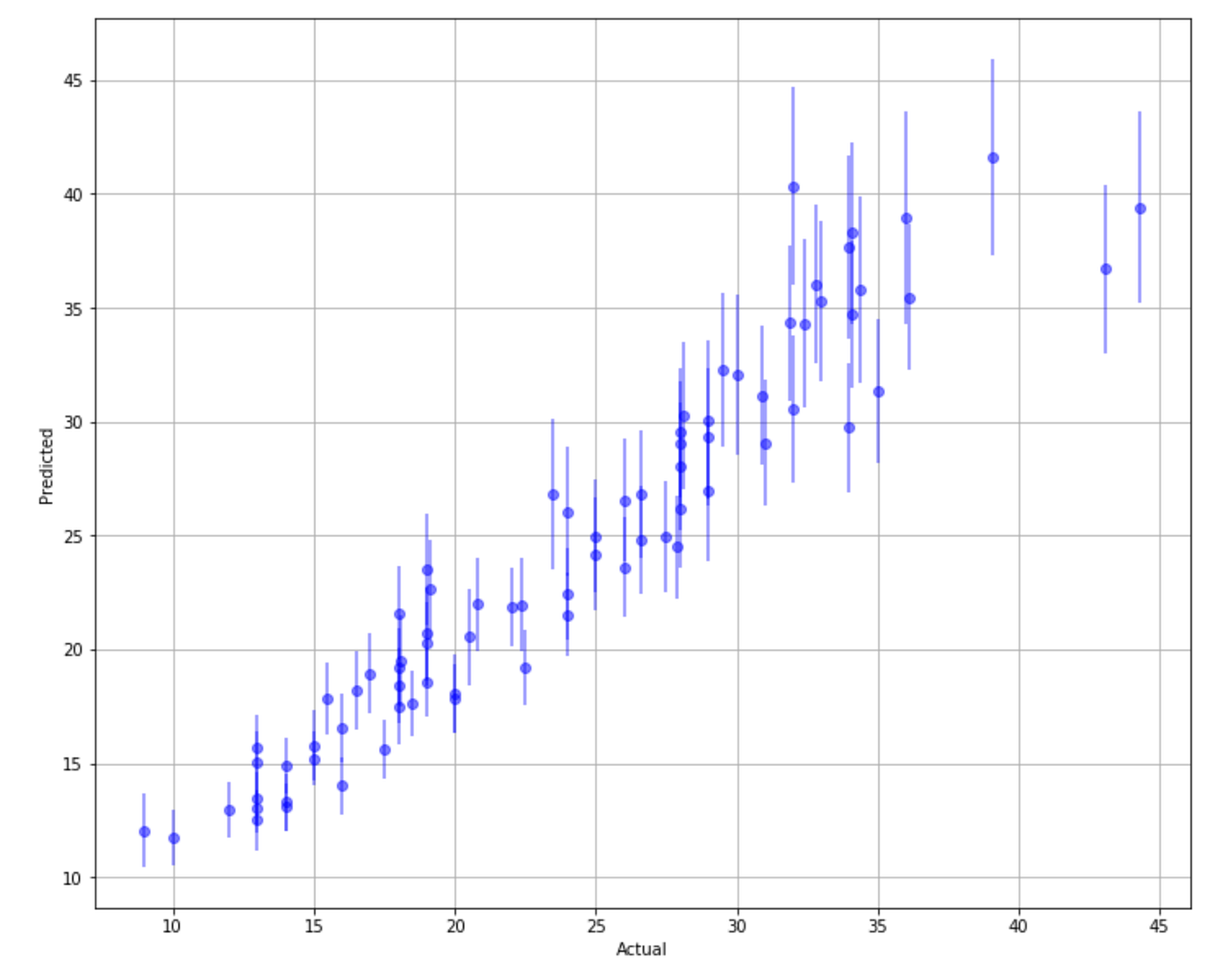

Regression Tutorial with the Keras Deep Learning Library in ... Jun 08, 2016 · 1. Monitor the performance of the model on the training and a standalone validation dataset (even plot these learning curves). When skill on the validation set goes down and skill on training goes up or keeps going up, you are overlearning. 2. Cross validation is just a method for estimating the performance of a model on unseen data.

Confident Learning: Estimating Uncertainty in Dataset Labels Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in datasets, based on the principles of pruning noisy data, counting with probabilistic thresholds to estimate noise, and ranking examples to train with confidence.
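To make "counting with probabilistic thresholds" concrete, here is a minimal, library-free sketch of one common formulation. The function name `confident_joint` and the toy data are assumptions for illustration. Each class gets a threshold equal to the mean predicted probability of that class over the examples labeled with it, and an example is counted under a class only if its probability meets that class's threshold. The sketch assumes every class appears at least once among the given labels.

```python
def confident_joint(labels, pred_probs):
    """Count examples by (given label, confidently predicted label).

    Returns (thresholds, counts), where counts[i][j] is the number of
    examples with given label i that confidently look like class j.
    """
    num_classes = len(pred_probs[0])

    # Per-class threshold: mean probability of class j over examples labeled j.
    thresholds = []
    for j in range(num_classes):
        probs_j = [p[j] for p, y in zip(pred_probs, labels) if y == j]
        thresholds.append(sum(probs_j) / len(probs_j))

    counts = [[0] * num_classes for _ in range(num_classes)]
    for p, given in zip(pred_probs, labels):
        # Classes whose predicted probability reaches that class's threshold.
        candidates = [j for j in range(num_classes) if p[j] >= thresholds[j]]
        if not candidates:
            continue  # not confidently in any class, so it is left uncounted
        likely_true = max(candidates, key=lambda j: p[j])
        counts[given][likely_true] += 1
    return thresholds, counts


labels = [0, 0, 0, 1, 1, 1]
pred_probs = [[0.9, 0.1], [0.8, 0.2], [0.2, 0.8],
              [0.1, 0.9], [0.3, 0.7], [0.85, 0.15]]
thresholds, counts = confident_joint(labels, pred_probs)
print(counts)  # off-diagonal entries are candidate label errors
```

Using a per-class threshold rather than a fixed cutoff such as 0.5 lets the count adapt when the model is systematically more or less confident about some classes.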

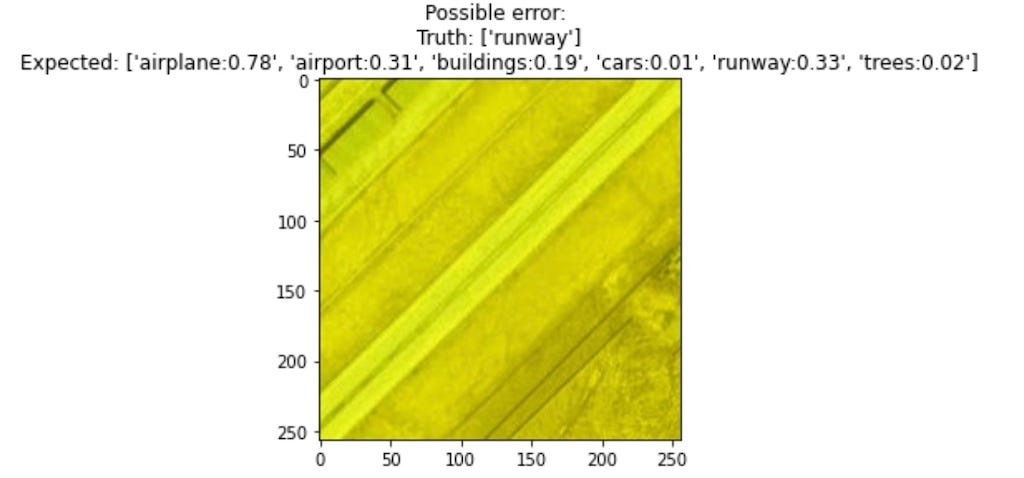

An Introduction to Confident Learning: Finding and Learning with Label Errors in Datasets. Curtis Northcutt (Mod), replying to Justin Stuck, 3 years ago: Hi, thanks for the questions. Yes, multi-label is supported, but it is alpha (use at your own risk). You can set `multi_label=True` in the `get_noise_indices()` function and other functions.

Paper walkthrough: "Confident Learning: Estimating Uncertainty in Dataset Labels". Deep Bayesian learning: In this work we develop tools to obtain practical uncertainty estimates in deep learning, casting recent deep learning tools as Bayesian models without changing either the models or the optimisation. In the first part of this thesis we develop the theory for such tools, providing applications and illustrative examples. We tie approximate inference in Bayesian models to ...

A mathematical programming approach to SVM-based … 30.8.2022 · For instance, in the recent method presented in Northcutt, Jiang, and Chuang (2019), based on the so-called Support Vector Machine with Confident Learning (SVM-CL) approach, the authors propose a probabilistic method in three sequential phases: (1) estimate the transition matrix of class-conditional label noise, (2) filter out noisy …

Confident Learning: Estimating Uncertainty in Dataset Labels. 14 Apr 2021. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in ...

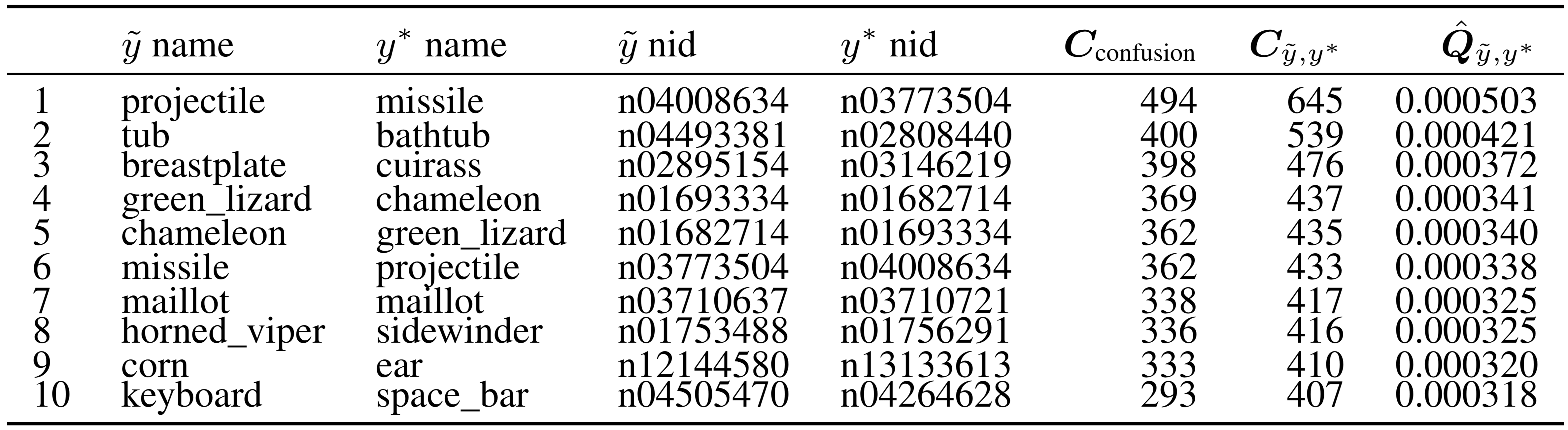

Confident Learning: Estimating Uncertainty in Dataset Labels. ... the CIFAR dataset. The results presented are reproducible with the implementation of CL algorithms, open-sourced as the cleanlab Python package. These contributions are presented beginning with the formal problem specification and notation (Section 2), then defining the algorithmic methods employed for CL (Section 3) ...

Are Label Errors Imperative? Is Confident Learning Useful? Confident learning (CL) is a class of learning where the focus is to learn well despite some noise in the dataset. This is achieved by accurately and directly characterizing the uncertainty of label noise in the data. The foundation CL depends on is that label noise is class-conditional: it depends only on the latent true class, not on the data.
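The class-conditional assumption is easy to see in a small simulation. The sketch below is illustrative only: `add_class_conditional_noise` and the example matrices are hypothetical, and the function deliberately takes no features, because under this assumption the chance of a label flip depends only on the true class.

```python
import random


def add_class_conditional_noise(true_labels, noise_matrix, seed=0):
    """Sample observed labels from true labels.

    noise_matrix[k][j] is P(observed label = j | true label = k),
    so each row must be non-negative and sum to 1.
    """
    rng = random.Random(seed)  # seeded for reproducibility
    classes = list(range(len(noise_matrix)))
    return [rng.choices(classes, weights=noise_matrix[y])[0]
            for y in true_labels]


true_labels = [0, 0, 1, 1, 0, 1]

# Identity matrix: no noise, observed labels equal the true labels.
clean = add_class_conditional_noise(true_labels, [[1.0, 0.0], [0.0, 1.0]])

# Class 0 is always mislabeled as 1; class 1 is never mislabeled.
flipped = add_class_conditional_noise(true_labels, [[0.0, 1.0], [0.0, 1.0]])

# A milder setting: 20% of true class 0 examples get observed label 1.
noisy = add_class_conditional_noise(true_labels, [[0.8, 0.2], [0.0, 1.0]])
print(clean, flipped, noisy)
```

Real annotation errors can also depend on the input itself (for example, blurry images), which this model does not capture.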

Book - NIPS. Beyond Value-Function Gaps: Improved Instance-Dependent Regret Bounds for Episodic Reinforcement Learning. Christoph Dann, Teodor Vanislavov Marinov, Mehryar Mohri, Julian Zimmert; Learning One Representation to Optimize All Rewards. Ahmed Touati, Yann Ollivier; Matrix factorisation and the interpretation of geodesic distance. Nick …

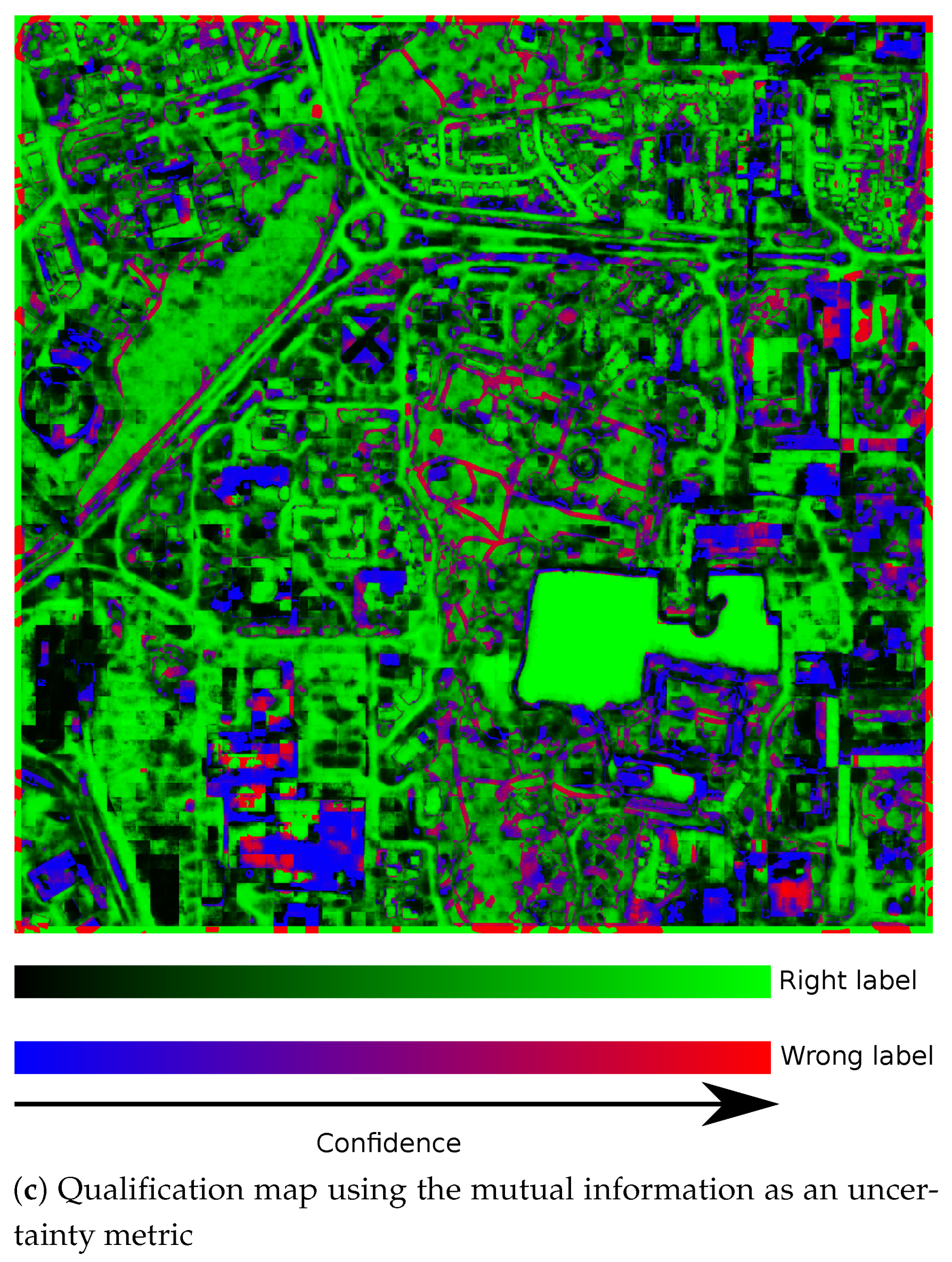

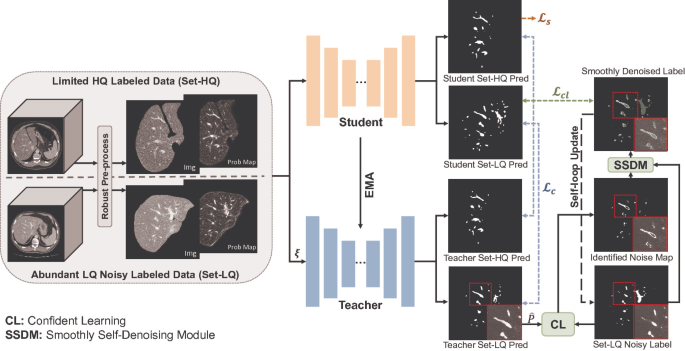

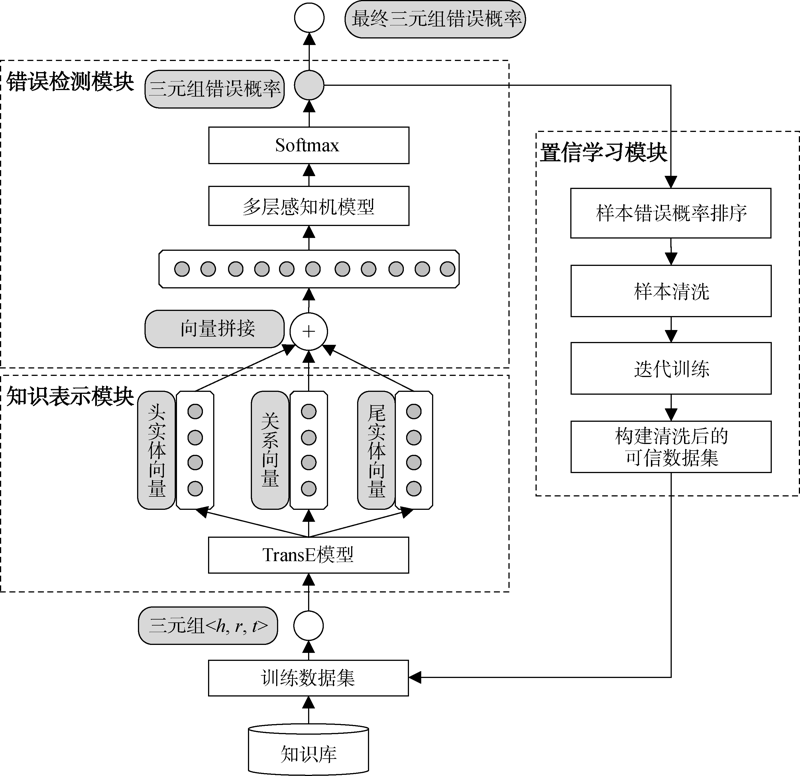

Characterizing Label Errors: Confident Learning for Noisy-Labeled Image ... 2.2 The Confident Learning Module. Based on the assumption of Angluin, CL can identify the label errors in the datasets and improve the training with noisy labels by estimating the joint distribution between the noisy (observed) labels \(\tilde{y}\) and the true (latent) labels \({y^*}\). Remarkably, no hyper-parameters and few extra ...

PDF Confident Learning: Estimating Uncertainty in Dataset Labels - ResearchGate Confident learning estimates the joint distribution between the (noisy) observed labels and the (true) latent labels and can be used to (i) improve training with noisy labels, and (ii) identify...
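As a rough illustration of estimating that joint distribution, the sketch below turns a matrix of confident counts into a joint over (observed label, true label). The counts, the helper names `estimate_joint` and `estimated_error_fraction`, and the calibration step (rescaling each row to match the number of examples with that observed label, then normalizing) are assumptions of this example, not a statement of any library's exact procedure. It assumes every row of counts has at least one entry.

```python
def estimate_joint(counts, label_counts):
    """Estimate the joint distribution of (observed label, true label).

    counts[i][j]: confidently counted examples with observed label i
                  that look like true class j.
    label_counts[i]: total number of examples with observed label i.
    """
    calibrated = []
    for row, n_observed in zip(counts, label_counts):
        row_total = sum(row)
        # Rescale so the row sums to the number of examples labeled i.
        calibrated.append([c * n_observed / row_total for c in row])
    total = sum(sum(row) for row in calibrated)
    # Normalize so all entries sum to 1.
    return [[c / total for c in row] for row in calibrated]


def estimated_error_fraction(joint):
    """Off-diagonal mass: estimated fraction of mislabeled examples."""
    return sum(v for i, row in enumerate(joint)
               for j, v in enumerate(row) if i != j)


counts = [[40, 10], [5, 45]]   # hypothetical confident counts
label_counts = [60, 40]        # hypothetical observed-label totals
joint = estimate_joint(counts, label_counts)
error_fraction = estimated_error_fraction(joint)
print(joint, error_fraction)
```

Multiplying an off-diagonal entry by the dataset size gives a rough estimate of how many examples with that observed label belong to the other class, which can guide how many examples to prune.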

Chipbrain Research | ChipBrain | Boston Confident Learning: Estimating Uncertainty in Dataset Labels By Curtis Northcutt, Lu Jiang, Isaac Chuang. Learning exists in the context of data, yet notions of confidence typically focus on model predictions, not label quality. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and ...

Explainable Artificial Intelligence (XAI): Concepts, taxonomies ... 1.6.2020 · Fig. 1 displays the rising trend of contributions on XAI and related concepts. This literature outbreak shares its rationale with the research agendas of national governments and agencies. Although some recent surveys summarize the upsurge of activity in XAI across sectors and disciplines, this overview aims to cover the …

![[PDF] Confident Learning: Estimating Uncertainty in Dataset ...](https://d3i71xaburhd42.cloudfront.net/6482d8c757bce9b0d208d37d4b09e4805faae983/25-Figure9-1.png)

![[R] Announcing Confident Learning: Finding and Learning with ...](https://external-preview.redd.it/p3HQXQzdkmyXyJ89enL6PkgmSdstFY5z1QkzOzRNUaU.jpg?auto=webp&s=01b29ed2d2e90d072e1fc7295da2c1cb3797f686)

![[PDF] Confident Learning: Estimating Uncertainty in Dataset ...](https://d3i71xaburhd42.cloudfront.net/6482d8c757bce9b0d208d37d4b09e4805faae983/24-Figure7-1.png)

![[PDF] Confident Learning: Estimating Uncertainty in Dataset ...](https://d3i71xaburhd42.cloudfront.net/6482d8c757bce9b0d208d37d4b09e4805faae983/29-Figure16-1.png)

![[PDF] Confident Learning: Estimating Uncertainty in Dataset ...](https://d3i71xaburhd42.cloudfront.net/6482d8c757bce9b0d208d37d4b09e4805faae983/8-Table2-1.png)
